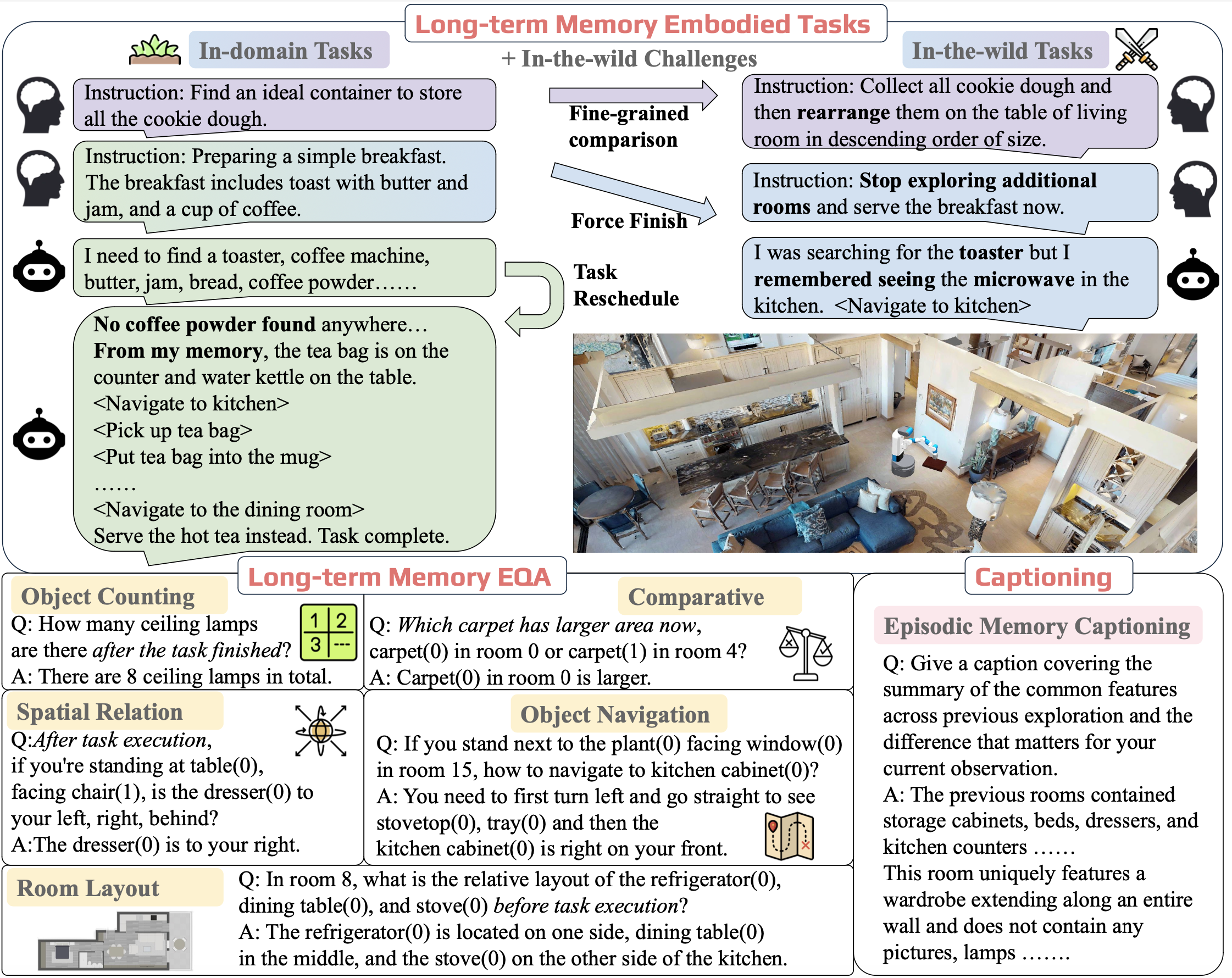

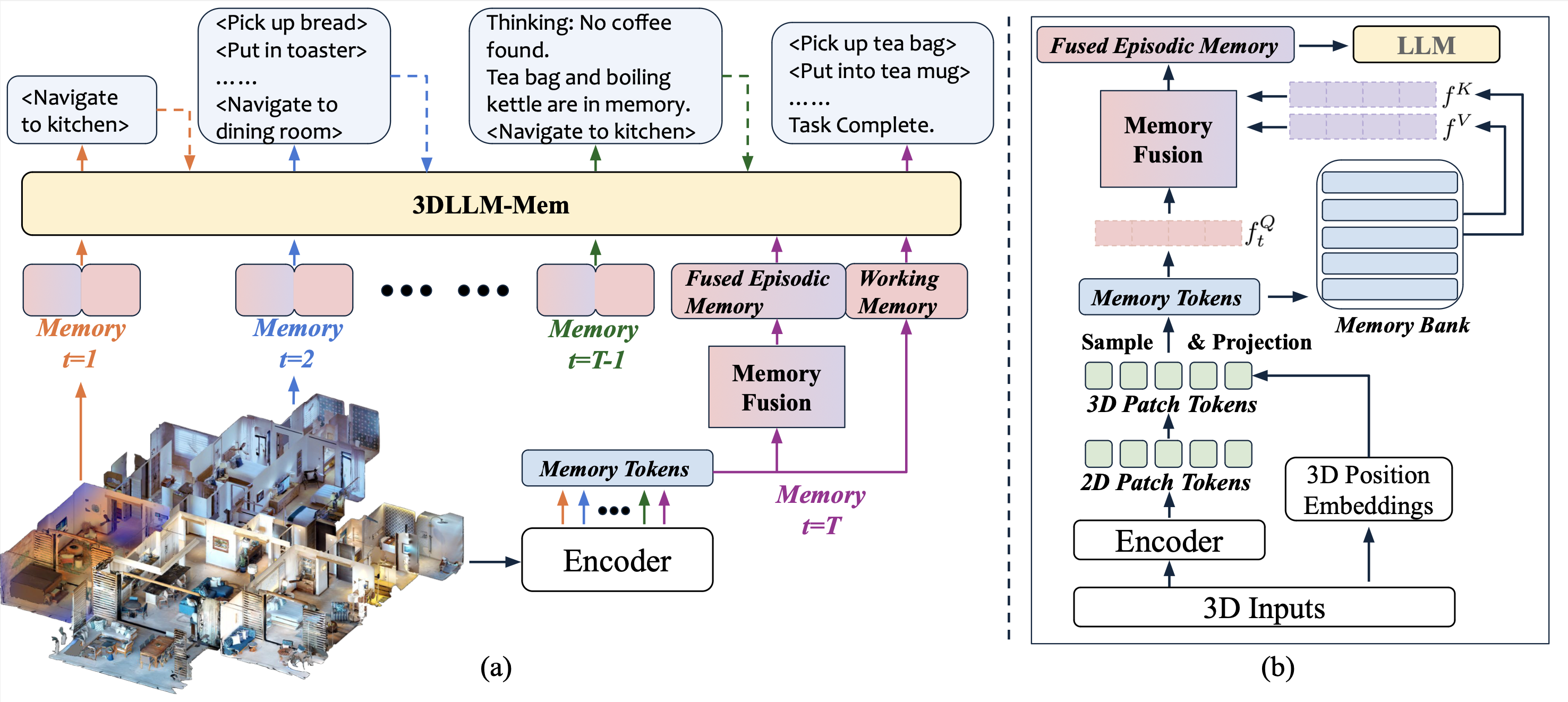

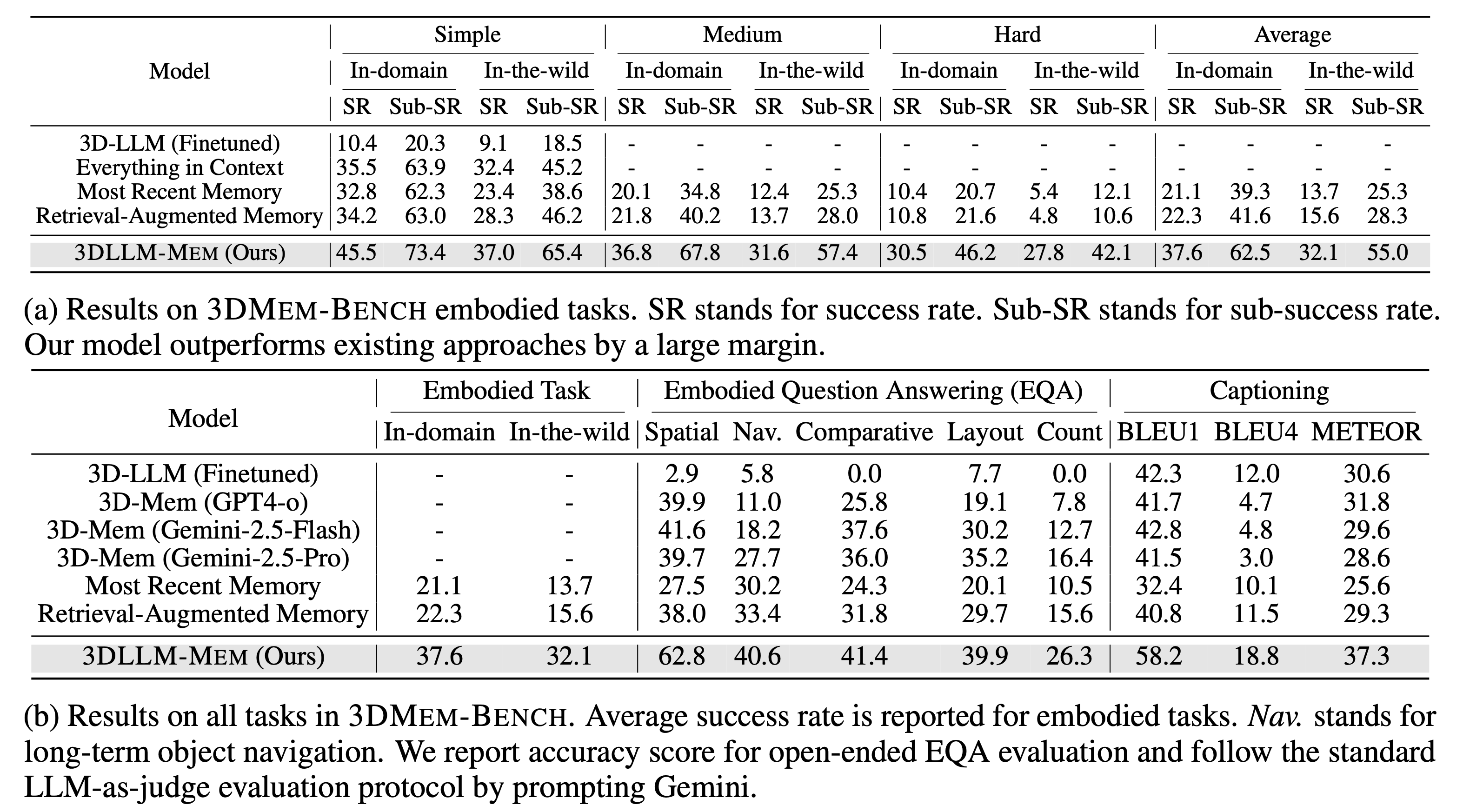

Humans excel at performing complex tasks by leveraging long-term memory across temporal and spatial experiences. In contrast, current Large Language Models (LLMs) struggle to effectively plan and act in dynamic, multi-room 3D environments. We posit that part of this limitation is due to the lack of proper 3D spatial-temporal memory modeling in LLMs. To address this, we first introduce 3DMem-Bench, a comprehensive benchmark comprising over 26,000 trajectories and 2,892 embodied tasks, question-answering and captioning, designed to evaluate an agent's ability to reason over long-term memory in 3D environments. Second, we propose 3DLLM-Mem, a novel dynamic memory management and fusion model for embodied spatial-temporal reasoning and actions in LLMs. Our model uses working memory tokens, which represents current observations, as queries to selectively attend to and fuse the most useful spatial and temporal features from episodic memory, which stores past observations and interactions. Our approach allows the agent to focus on task-relevant information while maintaining memory efficiency in complex, long-horizon environments. Experimental results demonstrate that 3DLLM-Mem achieves state-of-the-art performance across various tasks, outperforming the strongest baselines by 16.5% in success rate on 3DMem-Bench's most challenging in-the-wild embodied tasks.

Our 3DLLM-Mem agent begins by exploring the environment to locate an appropriate setup area—identified by the presence of chairs and medicine on the table. Leveraging its memory, the agent sequentially collect essential items: a band-aid, a water bottle, additional medications, and, a teddy bear for the child.

Agent's First-Person View

Third-Person View

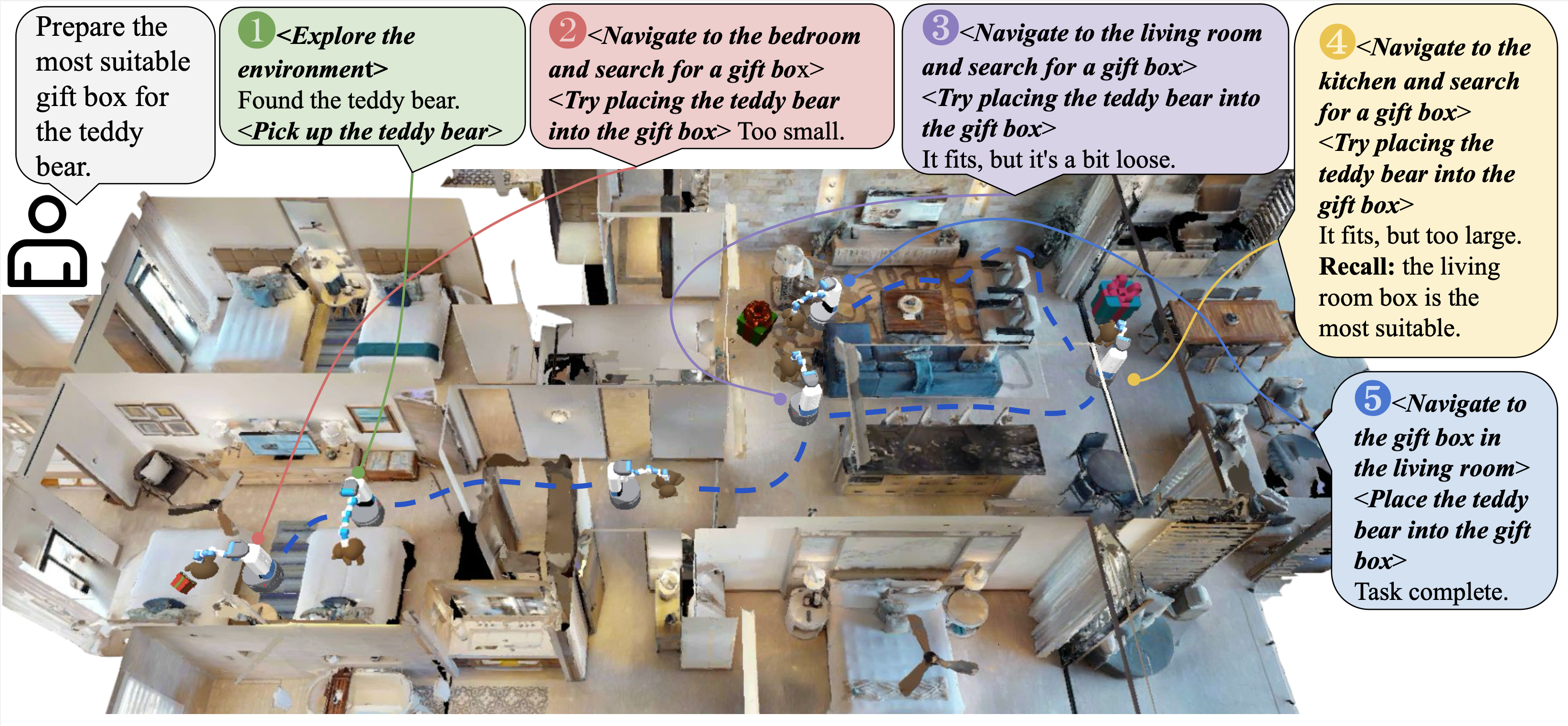

Our 3DLLM-Mem agent begins by locating the teddy bear. It then evaluates a gift box in the bedroom, navigates to the living room to inspect another, and finally searches the kitchen for a third option. After comparing all available gift boxes, the agent recalls its observations and selects the most suitable one—the box found in the living room.

Agent's First-Person View

Third-Person View

Our 3DLLM-Mem agent begins by placing a candle to set the mood, followed by arranging two plates. The agent then locates a wine bottle on the kitchen counter and places it on the table. Next, it prepares each serving: one slice of bread and an avocado for each plate. Finally, it completes the setup by placing a fork beside each plate, creating a thoughtful and well-organized breakfast setting for two.

Agent's First-Person View

Third-Person View

Our 3DLLM-Mem agent begins by freely exploring the environment, forming an initial memory. After receiving the task instruction, it recalls a book stored in a bedroom cabinet, navigates there, and retrieves it. The agent returns to the living room and places it book on the table. Since no other books come to mind, it resumes exploration and eventually finds a second book on the bed. This book is brought back and stacked atop the first. Finally, recalling a teacup previously seen in the kitchen, the agent retrieves it and places it on the table, completing the cozy reading nook setup.

Agent's First-Person View

Third-Person View

Our 3DLLM-Mem agent starts at its initial position and discovers the first basket and then explores the environment while finding each clothing item—leggings, tank top, tights, and turtleneck—hovering over them to evaluate suitability. Afterward, it returns to the location of the first basket, puts it down and picks up the second one, and repeats the comparison process. Concluding that the first basket is the most suitable, the agent returns to it.

Agent's First-Person View

Third-Person View

@article{hu20253dllmmemlongtermspatialtemporalmemory,

title={3DLLM-Mem: Long-Term Spatial-Temporal Memory for Embodied 3D Large Language Model},

author={Wenbo Hu and Yining Hong and Yanjun Wang and Leison Gao and Zibu Wei and Xingcheng Yao and Nanyun Peng and Yonatan Bitton and Idan Szpektor and Kai-Wei Chang},

year={2025},

eprint={2505.22657},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2505.22657},

}